Whatever it takes to understand a central banker - Embedding their words using neural networks.

Abstract

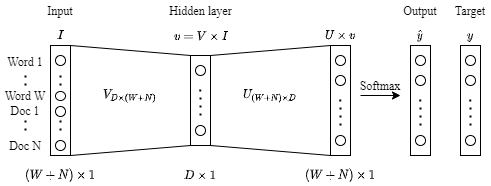

Dictionary approaches are at the forefront of current techniques for quantifying central bank communication. This paper proposes embeddings (a language model trained using machine learning techniques) to locate words and documents in a multidimensional vector space. To accomplish this, we gather a text corpus that is unparalleled in size and diversity in the central bank communication literature and introduce a novel approach to text quantification from computational linguistics. Using this corpus of over 23,000 documents from over 130 central banks, we provide high-quality text representations (embeddings) for central banks. Finally, we demonstrate the applicability of embeddings through several examples in the fields of monetary policy surprises, financial uncertainty, and gender bias.
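To make the idea of locating words and documents in a vector space concrete, the following minimal sketch may help. It is not the model trained in this paper: the three-dimensional vectors are invented toy values, the names `toy_vectors`, `cosine_similarity`, and `document_vector` are hypothetical, and averaging word vectors is only one simple, assumed way to obtain a document vector.

```python
import math

# Toy 3-dimensional word vectors. The values are invented for illustration
# and are NOT taken from the embeddings described in the paper.
toy_vectors = {
    "inflation": [0.9, 0.1, 0.0],
    "prices": [0.8, 0.2, 0.1],
    "unemployment": [0.1, 0.9, 0.2],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def document_vector(tokens, vectors):
    """Average the vectors of all known tokens (an assumed, simple baseline)."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        raise ValueError("no token of the document has a vector")
    dim = len(known[0])
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


# Words used in similar contexts should lie close together in the space.
sim_close = cosine_similarity(toy_vectors["inflation"], toy_vectors["prices"])
sim_far = cosine_similarity(toy_vectors["inflation"], toy_vectors["unemployment"])

# A document is placed in the same space as its words; "and" is skipped
# because it has no vector in this toy vocabulary.
doc = document_vector(["inflation", "and", "prices"], toy_vectors)

print(sim_close > sim_far)
print(len(doc))
```

In this toy setting, "inflation" lies closer to "prices" than to "unemployment", and the document vector has the same dimensionality as the word vectors, so words and documents can be compared with the same similarity measure.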